Tag Along With Adler: How This Adler Zooniverse Research Project Got Started

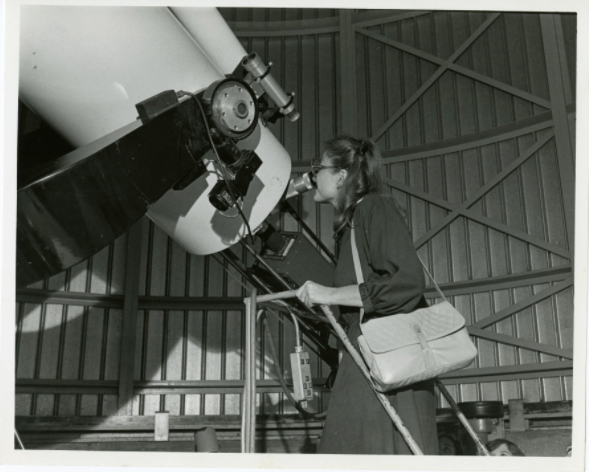

Header Image: A person looking through the Doane Observatory telescope at the Adler Planetarium.

Written By: Former Digital Collections Access Manager Jessica BrodeFrank and Dr. Samantha Blickhan, Co-Director of Zooniverse and DH Lead

Have you ever searched the internet for something specific, only to find that no matter what you typed in the search bar, you couldn't find what you were looking for? The same problem can happen when searching digital collections! This disconnect between search terms and searchable data happens most often when the language used by the searcher differs from the language used by the institution. Even as museums begin to incorporate AI and machine learning into the process of creating descriptive language and keywords for collections materials, many of these differences in vocabulary and word choice are being trained right into these programs, which continues to limit discoverability! Tag Along with Adler is a new crowdsourced research project from the Adler Planetarium that invites anyone to join us in the curatorial process of describing images of our objects.

Traditionally, museums have focused on cataloging collections in order to record what an object is. For the most part, this means that collections records focus on information such as who made an object, when and where it was made, and what it is made of. This is all important information to record for future generations, but it misses what the object is about. Many users of online collections search for objects based on visual characteristics, such as what is depicted on an object, or what the object means or does. If this information is missing from the records, it can be difficult or even impossible to find what you're looking for.

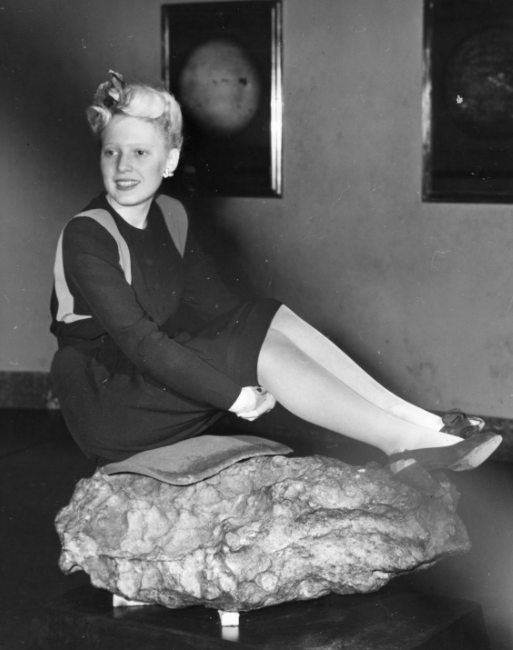

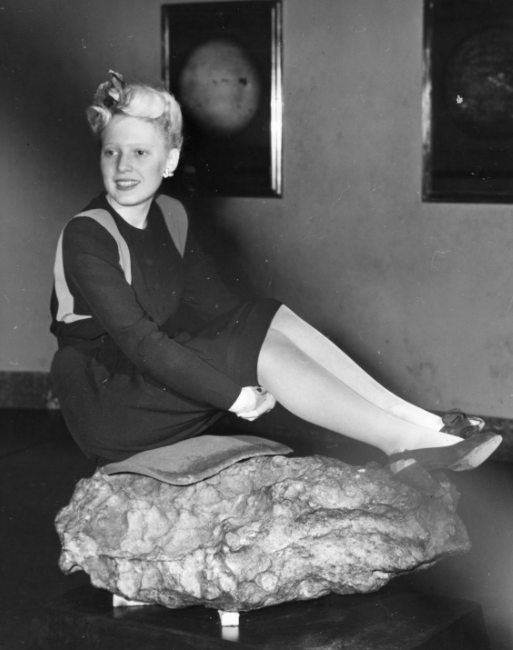

Most museums make their collections searchable online by adding metadata descriptions of their objects. However, whether these searches succeed depends on how museums describe their collections. For example, if you saw this image at the Adler Planetarium and wanted to share it with a friend later, you might pull up the Adler's digital collection search page and start typing in various terms. What terms would you use? Currently, the terms that would pull this image up in the search results are: woman, meteorite, Chicago Park District, 1945, 1950, Adler Planetarium, Chicago. Perhaps you used one of these, but if you tried any other terms, such as "black and white," "girl," or "posing," you wouldn't find this image!

This is a problem for museum collections across the world, and it was causing issues internally for staff at the Adler Planetarium, too! As digital programming and social media use have increased due to the COVID-19 pandemic, we often want to include collections materials in our digital engagement strategy. When we tried to search our own collections based on visual characteristics, we realized that the search results made it difficult even to determine what options we had.

Imagine: a huge snowstorm is rolling through Chicago, and you want to share a picture of a time the Adler was "snowed in." Without image tags (descriptive words such as "snow" or "blizzard" added to the catalogue record), how do you find the one image of the Adler in the snow among the 300+ images that include the Adler building? Without a robust search feature, we have had to rely on staff members looking through the collections manually. If our own staff were having trouble with this process, we had to assume the same issues were affecting our guests and online users, too!

How Tag Along With Adler Got Started

I, Jessica BrodeFrank, am the Digital Collections Access Manager at the Adler Planetarium, and for almost 5 years I have helped to manage all the digital collections within our historic collections department, including working with the Collections team to ensure objects are searchable on our online collections search. At the same time, I am a research student working on my PhD at the University of London, School of Advanced Study, specializing in how to increase ease of search and make databases more accessible and inclusive through the use of crowdsourcing.

Together with our Collections and Zooniverse teams, I ran a project called Mapping Historic Skies from November 2019 to February 2021. On the Zooniverse side, the collaboration included Zooniverse Humanities Lead Dr. Samantha Blickhan (co-author of this blog!) and our former Zooniverse Designer Becky Rother. Through this collaboration, our Collections team witnessed firsthand the exciting results of using a crowdsourcing platform like Zooniverse to engage with thousands of volunteer citizen scientists. As Mapping Historic Skies was ending, we (Jessica and Samantha) began to consider how crowdsourcing could help the Collections team further enrich the Adler's collections database.

Tag Along with Adler is part of my doctoral research and a result of the ongoing collaborative work between our Adler Zooniverse and Collections teams. Building on earlier crowdsourcing projects such as steve.museum, which ran in the 2000s, Tag Along with Adler explores how inviting volunteers into the traditionally professionally curated process of describing collections can not only create engaging experiences for guests but also produce a more representative and diverse set of search terms for collections.

Get Involved With This Research Project

In Tag Along with Adler, we are asking you to look at the visual art within our collections of works on paper, rare book illustrations, and historic photographs, and to add the terms you would use to search for these images. We acknowledge that no one person can anticipate the language of everyone, so we want your help in creating more access points to our data! By volunteering with this project, you help not only the Adler Collections and Zooniverse teams but also the research being conducted for my doctorate. Remember: consensus is not the goal! We want your language and your participation to help us revolutionize the way the museum field looks at interactive experiences and cataloging practices.

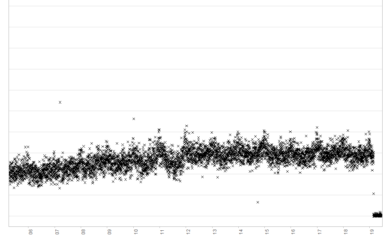

This research project also introduces volunteers to the work that goes into automating this type of process. A frequent question about crowdsourcing projects is, "Why can't a computer do this?" I ran the project images through two different AI tagging models: one trained on 155,531 samples from the Metropolitan Museum of Art's collections, and the other provided by the Google Cloud Vision API (the basis for Google Image search). In the "Verify AI Tags" workflow, volunteers can see the tags these two models created for the Adler collections and help verify the suggestions. This lets volunteers see both the benefits of AI tagging and its drawbacks and limitations, demonstrating that while automated processes can be helpful, we need to engage with them critically if we want to avoid incorrect or biased results.

Tag Along With Adler’s Impact

Thanks to the dedicated volunteers on Adler Zooniverse, Tag Along with Adler is officially 90% complete, 10 months after its launch. To date, Zooniverse volunteers have completed 20 subject sets in the research project, accounting for 1,990 images from the Adler's collections across the "Verify AI" and "Tag Images" workflows!

Over 3,500 registered volunteers, along with countless unregistered participants, have contributed 99,500 classifications. These classifications created 306,063 tags (terms or words used to describe a collections image). Less than 14% of the tags created in Tag Along with Adler were already available in the Adler's collections catalogue, and less than 8% of these tags were also generated by the AI models. This underscores the need for people-powered participation in the cataloguing process and the importance of this research project in expanding the discoverability of collections!

As we continue to run this citizen science project, we are looking not only at the language and tags being created but also at volunteers' experience and participation. Our teams at the Adler Planetarium and Zooniverse strive to create the most engaging participatory experiences possible, and as we consider how to do this for projects that expose the bias and limitations of AI, encourage input from users, and help expand how we describe our collections, we have to look at how users are responding.

Looking back at the meteorite image from earlier, Tag Along with Adler volunteers added the following terms to describe it: Asteroid, Blonde, Display, exhibit, Exhibition, Girl, meteor, Meteorite, Moon rock, Museum, Planets, Photograph, portrait, pose, Rock, sitting, sitting on, Space rock, vintage, Woman, Young Woman. There are already many more ways to find this image!

Our team is enthusiastic about these results: volunteers have already created over 306,000 tags that will help improve and diversify the cataloging of our collections. Even more incredible, these came from over 3,500 individual participants, helping the Adler expand whose voices are included in our collections and change the way we describe our objects to better serve the public. In this project, consensus is not the goal. Join in and help us enrich access to our collections!

Gamification Of This Citizen Science Research Project

The tags volunteers have added in the Tag Along with Adler workflows have been instrumental not only in our research and in expanding the Adler's catalogue language but also in creating a new video game that we are using to test gamification in citizen science.

In November 2021, we launched the "Meta Tag Game," which asks volunteers to help the Adler Planetarium tag its collections, expanding how we describe our objects and how people like you can find them online. As you play, you can see how language and word choice may affect your ability to find things.

To begin, you will be shown an image of an object in the Adler's collection along with all the words that make it searchable online: its metadata! The words fall down over the image, and it's your job to sort them. Move any words you would use to describe the image to the right, and move any words you don't think describe the image to the left. Some of these terms were created by museum staff, some by AI and machine learning, and some by Tag Along with Adler Zooniverse volunteers! That's right: your work on this project is now an integral part of this game.

Not only is this new game an exciting first for the Adler Planetarium's Collections team, but it also puts the work of our Zooniverse volunteers to new use and lets volunteers help approve metadata tags.

We need your help testing this early version of the game! Please join in and let us know what you think using the survey after you have played.

The Tag Along team thanks all our Zooniverse volunteers for their time and enthusiasm for this project. We could not have designed a game like this without your participation. This game allows our Collections team to keep connecting with the Adler's community online, and we can't wait to see how gamification affects participation and language choice.

Learn More About Adler Zooniverse

Zooniverse is the world’s largest and most popular platform for people-powered research. This research is made possible by volunteers—more than 2.2 million people around the world who come together to assist professional researchers. In 2020, Zooniverse gained over 263,000 new volunteer citizen scientists. You don’t need any specialized background, training, or expertise to participate in Zooniverse projects. It is easy for anyone to contribute to real academic research, on their own computer, at their own convenience.